AI agents are here, and they aren’t just calling APIs or pushing content. They’re thinking. They’re deciding. They’re planning and delegating. And they’re doing it across your infrastructure, your services, and your traffic management stack.

The problem? Most of our systems have no idea what they’re doing.

Traditional observability was built to track services. You know, real things: requests, responses, latencies, paths. It wasn’t built to understand why a request happened, only that it did. But now your traffic might originate from a recursive reasoning loop inside an agent, and all you’ll see in your logs is a POST to /search with a payload and a 200.

That’s not observability. That’s opacity.

If we’re going to operationalize AI agents safely, especially in production environments, traffic management systems have to be an integral part of the strategy.

But first, observability has to catch up. And that starts with closing three critical gaps.

1. Log decisions, not just requests

Agents don’t just respond. They decide. They evaluate. They defer, re-route, escalate, or call tools. And if you’re only logging the HTTP layer, you’re missing the part that actually matters: why the thing happened.

You need structured logs that capture the agent’s intent, the selected action, the evaluated alternatives, and the outcome. You need decision paths. Not just status codes.

What to do:

- Extend log formats to include semantic fields like intent, action taken, confidence score, and policy constraint.
- Tag decisions by originating agent and trace them end-to-end across tool calls.
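Here’s a minimal Python sketch of what a decision log could look like: one JSON object per decision, emitted alongside the ordinary access log. The DecisionRecord and log_decision names, and fields such as trace_id, span_id, parent_span, alternatives, and outcome, are hypothetical, not a standard schema or any product’s log format. The assumption is that every tool call in one agent task shares a trace_id and points back to the decision that spawned it through parent_span.

```python
import json
import logging
import time
import uuid
from dataclasses import asdict, dataclass, field
from typing import List, Optional

logger = logging.getLogger("agent.decisions")


@dataclass
class DecisionRecord:
    """One agent decision. Field names are illustrative, not a standard schema."""

    agent_id: str             # originating agent identity
    trace_id: str             # shared by every decision and tool call in one task
    intent: str               # what the agent was trying to accomplish
    action: str               # the action it selected
    alternatives: List[str]   # options it evaluated and rejected
    confidence: float         # agent-reported confidence, 0.0 to 1.0
    policy_constraint: Optional[str] = None  # policy that narrowed the choice, if any
    outcome: Optional[str] = None            # filled in after the action completes
    parent_span: Optional[str] = None        # decision that spawned this one, if any
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex[:16])
    ts: float = field(default_factory=time.time)

    def to_json(self) -> str:
        # One JSON object per line; sorted keys keep output stable for log pipelines.
        return json.dumps(asdict(self), sort_keys=True)


def log_decision(record: DecisionRecord) -> str:
    """Emit the record through standard logging and return the JSON line."""
    line = record.to_json()
    logger.info(line)
    return line


# Usage: a root decision and the tool call it spawned share one trace_id.
trace_id = uuid.uuid4().hex
root = DecisionRecord(
    agent_id="research-agent-7",
    trace_id=trace_id,
    intent="verify_claim",
    action="call_tool:search",
    alternatives=["answer_directly", "escalate_to_human"],
    confidence=0.72,
    policy_constraint="no_external_writes",
)
child = DecisionRecord(
    agent_id="research-agent-7",
    trace_id=trace_id,
    intent="verify_claim",
    action="call_tool:fetch_source",
    alternatives=["stop_search"],
    confidence=0.81,
    parent_span=root.span_id,
    outcome="ok",
)
for rec in (root, child):
    log_decision(rec)
```

Because each record is a single JSON line keyed by trace_id, it can be joined against the HTTP layer after the fact, so the POST and the reason behind it end up in the same query.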

2. Track semantics, not just paths

Two POSTs to the same endpoint can mean wildly different things when they’re coming from AI agents. One might be a fact-check request, the other a content generation trigger. The path is identical, but the purpose, the context, and the risk are not.

That means semantic tagging has to become a first-class citizen in routing and observability.

What to do:

- Use inline inspection (payloads or headers) to extract intent tags like summarize, verify_identity, or generate.
- Feed those tags into routing, rate limiting, and auth decisions. Don’t treat all POSTs equally.
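A minimal Python sketch of that idea follows, assuming the agent (or a gateway in front of it) sets a hypothetical x-agent-intent header or an intent field in a JSON body. The policy table, pool names, rates, burst sizes, and the route() helper are illustrative assumptions, not a product API. The point is that the intent tag, not the path, selects the upstream pool, the rate-limit bucket, and whether step-up auth applies.

```python
import json
import time
from typing import Dict, Mapping, Optional, Tuple

# Hypothetical intent vocabulary and per-intent policy. Pool names, rates
# (tokens/second), and burst sizes are illustrative, not recommendations.
POLICY = {
    "summarize":       {"pool": "readonly-pool",   "rate": 10.0, "burst": 20, "step_up_auth": False},
    "verify_identity": {"pool": "identity-pool",   "rate": 1.0,  "burst": 3,  "step_up_auth": True},
    "generate":        {"pool": "generation-pool", "rate": 2.0,  "burst": 5,  "step_up_auth": False},
    "unknown":         {"pool": "quarantine-pool", "rate": 0.5,  "burst": 1,  "step_up_auth": True},
}


def extract_intent(headers: Mapping[str, str], body: bytes) -> str:
    """Pull an intent tag from a header first, then from a JSON body field."""
    tag = headers.get("x-agent-intent")  # hypothetical header; keys assumed lowercased
    if tag is None:
        try:
            payload = json.loads(body or b"{}")
        except ValueError:  # covers JSONDecodeError and invalid UTF-8
            payload = None
        tag = payload.get("intent") if isinstance(payload, dict) else None
    if not isinstance(tag, str):
        return "unknown"
    tag = tag.strip().lower()
    return tag if tag in POLICY else "unknown"


class TokenBucket:
    """Token bucket: refills at `rate` tokens per second, capped at `burst`."""

    def __init__(self, rate: float, burst: int, now: float):
        self.rate = rate
        self.capacity = float(burst)
        self.tokens = float(burst)
        self.last = now

    def allow(self, now: float) -> bool:
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = max(self.last, now)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# One bucket per (agent identity, intent): a burst of "generate" calls
# cannot spend the budget reserved for "summarize".
_buckets: Dict[Tuple[str, str], TokenBucket] = {}


def route(agent_id: str, headers: Mapping[str, str], body: bytes,
          now: Optional[float] = None) -> dict:
    """Return a routing decision for one request, keyed on its semantic tag."""
    now = time.monotonic() if now is None else now
    intent = extract_intent(headers, body)
    policy = POLICY[intent]
    key = (agent_id, intent)
    if key not in _buckets:
        _buckets[key] = TokenBucket(policy["rate"], policy["burst"], now)
    return {
        "intent": intent,
        "pool": policy["pool"],
        "allowed": _buckets[key].allow(now),
        "step_up_auth": policy["step_up_auth"],
    }


# Usage: same endpoint, different intents, different treatment.
print(route("agent-a", {"x-agent-intent": "summarize"}, b""))
print(route("agent-a", {}, b'{"intent": "verify_identity"}'))
```

Keying the buckets on (agent, intent) rather than on the path is the design choice doing the work here: two POSTs to the same endpoint land in different budgets. Untagged or unrecognized traffic falls into the most restrictive policy rather than the most permissive one.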

3. Monitor behavior over time

Agents don’t behave like stateless clients. They evolve. They try again. They stall. They adapt. That means observability systems need to move beyond single-request traces and start building behavior profiles.

You should know what "normal" looks like for an agent: how many retries it does, what tools it uses, how long its tasks take. And when that changes? You should know that too.

What to do:

- Track rolling metrics for each agent identity: retries, task depth, tool usage.
- Alert on anomalies: loops, latency drift, tool thrashing.
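Here’s a minimal Python sketch of a per-agent behavior profile. The window size, thresholds, alert names, and the AgentProfile class are hypothetical starting points, not tuned values or a known tool’s API. It treats a long run of the same tool as a loop, repeated A-B-A flips between tools as thrashing, and a recent-versus-baseline comparison of mean task latency as latency drift.

```python
from collections import Counter, defaultdict, deque
from statistics import mean
from typing import Deque, List, Sequence

# Hypothetical thresholds; tune against real traffic before alerting on them.
LOOP_RUN = 4          # same tool this many times in a row -> "loop"
THRASH_FLIPS = 3      # this many A-B-A flips between tools in one task -> "tool_thrashing"
MIN_BASELINE = 10     # tasks needed before latency drift is judged
RECENT = 5            # short window compared against the older baseline
DRIFT_RATIO = 1.5     # recent mean latency above 1.5x baseline mean -> "latency_drift"


def _longest_run(calls: Sequence[str]) -> int:
    """Length of the longest run of consecutive identical tool calls."""
    best = run = 0
    prev = None
    for call in calls:
        run = run + 1 if call == prev else 1
        best = max(best, run)
        prev = call
    return best


def _flips(calls: Sequence[str]) -> int:
    """Count A-B-A patterns: the agent bouncing back to a tool it just left."""
    return sum(1 for a, b, c in zip(calls, calls[1:], calls[2:]) if a == c != b)


class AgentProfile:
    """Rolling behavior profile for one agent identity."""

    def __init__(self, window: int = 50):
        self.latency: Deque[float] = deque(maxlen=window)  # seconds per task
        self.retries: Deque[int] = deque(maxlen=window)    # retries per task
        self.depth: Deque[int] = deque(maxlen=window)      # tool-call depth per task
        self.tool_usage: Counter = Counter()               # calls per tool, all time

    def record_task(self, latency_s: float, retries: int, depth: int,
                    tool_calls: Sequence[str]) -> List[str]:
        """Fold one finished task into the profile; return any alerts it raises."""
        calls = list(tool_calls)
        self.latency.append(latency_s)
        self.retries.append(retries)
        self.depth.append(depth)
        self.tool_usage.update(calls)

        alerts = []
        if _longest_run(calls) >= LOOP_RUN:
            alerts.append("loop")
        if _flips(calls) >= THRASH_FLIPS:
            alerts.append("tool_thrashing")
        history = list(self.latency)
        if len(history) >= MIN_BASELINE + RECENT:
            baseline, recent = history[:-RECENT], history[-RECENT:]
            if mean(recent) > DRIFT_RATIO * mean(baseline):
                alerts.append("latency_drift")
        return alerts

    def baseline(self) -> dict:
        """What "normal" currently looks like for this agent."""
        return {
            "mean_latency_s": mean(self.latency) if self.latency else None,
            "mean_retries": mean(self.retries) if self.retries else None,
            "mean_depth": mean(self.depth) if self.depth else None,
            "top_tools": self.tool_usage.most_common(3),
        }


# Usage: one profile per agent identity.
profiles = defaultdict(AgentProfile)
alerts = profiles["research-agent-7"].record_task(1.8, 0, 2, ["search", "fetch"])
```

Comparing a short recent window against an older baseline catches gradual slowdowns that a single-request latency threshold would miss. The baseline() snapshot is the "normal" the alerts are measured against.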

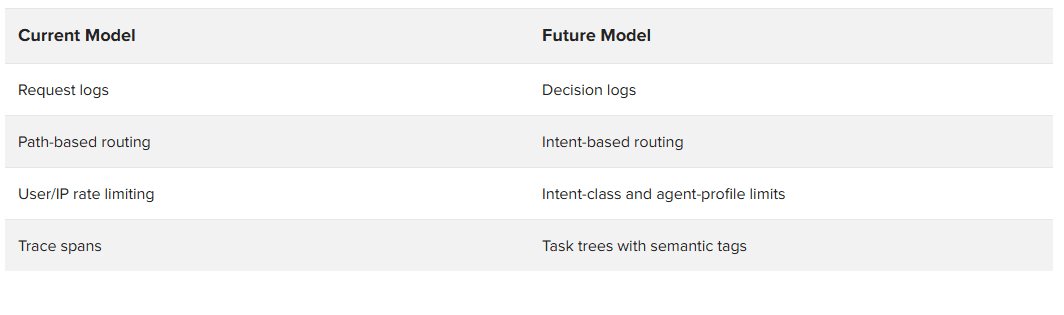

What this means for traffic management

All of this has real implications for the systems we trust to steer, secure, and scale our applications.

AI agents aren’t users. They’re not clients. They’re not even services in the traditional sense. They’re autonomous workflows. And until we treat them that way—starting with observability—we’re going to keep flying blind.

So no, your traffic isn’t just traffic anymore. And your infrastructure shouldn’t act like it is.

Start logging like an agent’s watching. Because it probably is.
