AI is no longer experimental. It’s in production—powering chatbots, informing decisions, and reshaping how enterprises operate. But there’s a blind spot hiding in plain sight: runtime.

While training grabs headlines, runtime is where AI comes to life. It’s where models engage with real users, data, and decisions. It’s also where the most significant security risks arise.

And here’s the reality: most enterprises engage with AI at the runtime layer, not during training. That’s where adoption is accelerating—and where security too often lags behind.

From Black Boxes to Attack Surfaces

AI runtime isn’t just a deployment phase. It’s a new attack surface—one that’s active, unpredictable, and exposed.

Here’s why:

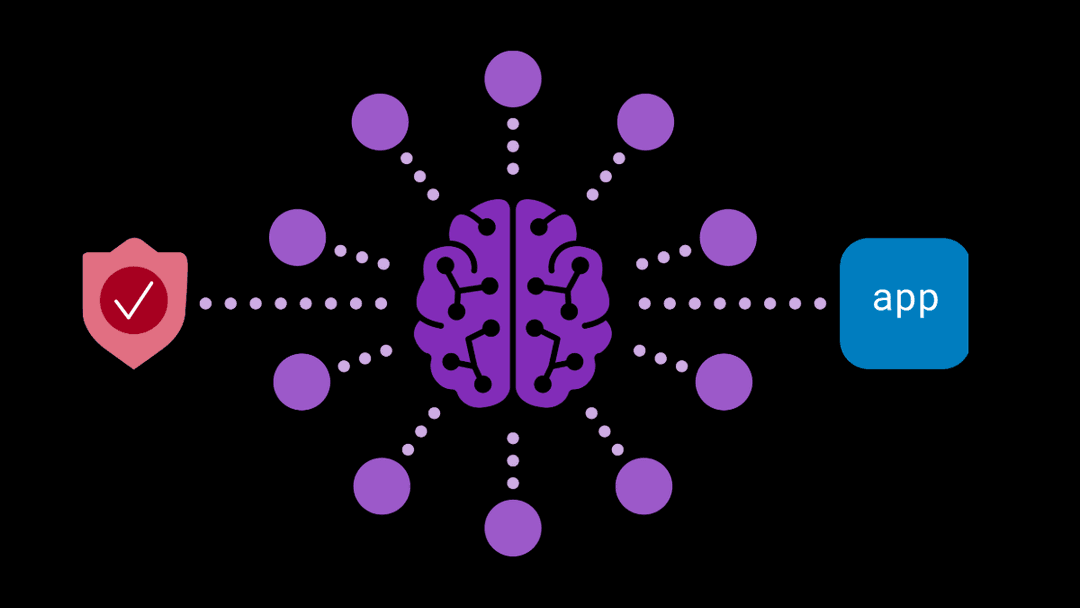

- Inputs are unpredictable. Every prompt to an AI model, application, or agent is a potential exploit. Attackers can weaponize seemingly innocent user inputs through prompt injections, jailbreaks, or malicious context chaining.

- Outputs are vulnerable. AI-generated content can leak proprietary data, expose sensitive customer information, or generate toxic or non-compliant responses—all in seconds, and often without detection.

- Models don’t forget. Some language models retain details from their training data. This unintended memorization can result in data exfiltration through casual prompts.

- Behavior is opaque. Frontier models are complex and often behave like black boxes, with no visibility into their workings. This unpredictability makes it harder to forecast how they’ll respond in edge cases or while under attack.

- Security teams lack control. Whether using APIs from model providers or hosting open-source models in-house, most enterprises don’t have fine-grained tools to enforce policy, monitor activity, or block attacks in real time.

The bottom line? Runtime is not passive. It’s dynamic, continuous, and exposed to live user inputs—making it a prime target for adversaries and a critical gap in most security programs.

A New Layer of Enterprise AI Protection

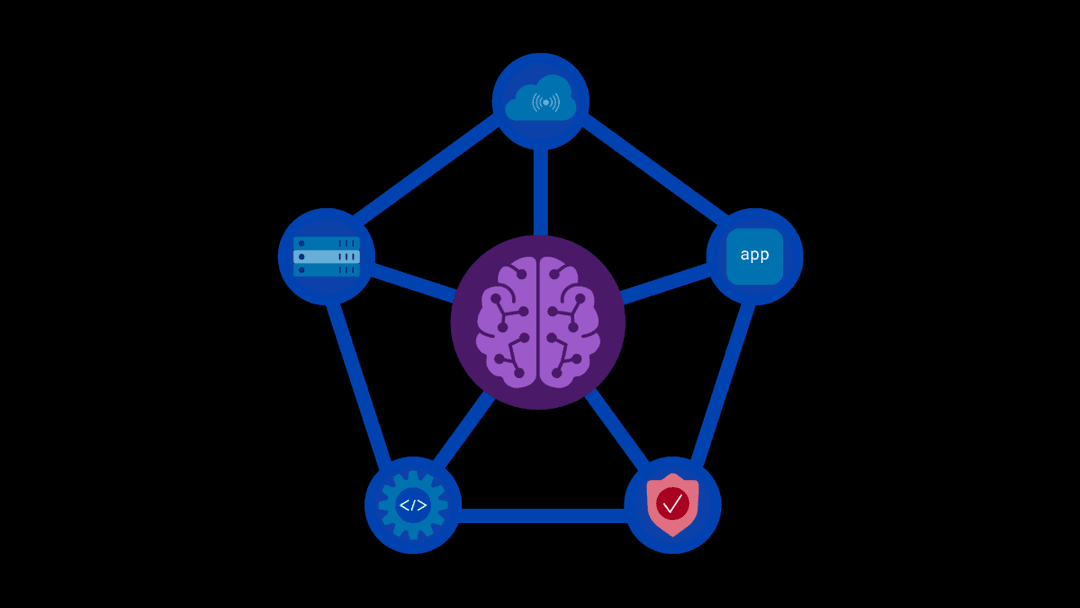

Securing AI at runtime isn’t a matter of a single control or tool—it requires a layered, adaptive perimeter built specifically for how AI systems are used in the real world.

That begins with enforcing policy at the point of interaction. Enterprises need mechanisms to inspect every prompt and every response—evaluating for prompt injection, harmful content, data leakage, and other context-specific risks. These runtime guardrails must be dynamic, updating in response to emerging threats and evolving compliance requirements, and all without disrupting business operations.

But securing runtime isn’t just about reacting, it’s also about anticipating. As attackers develop novel strategies to exploit model behavior, organizations need offensive capabilities that red team AI systems. Using advanced adversarial techniques designed specifically for AI ensures that defenses remain ahead of what’s possible, not just what’s known.

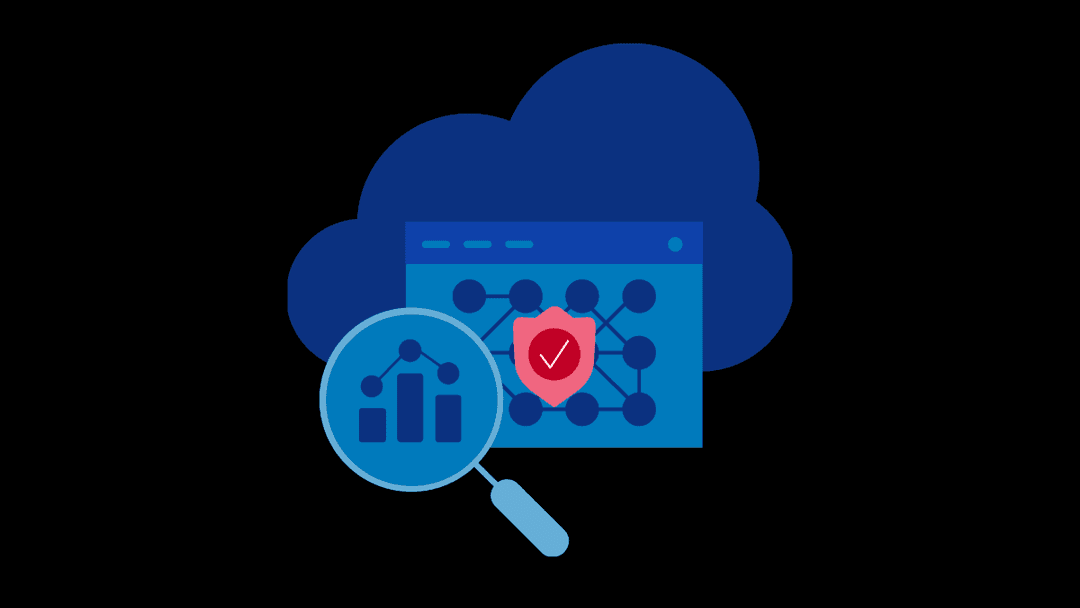

Finally, organizations need visibility. Without insight into how AI is being used—what models are being accessed, by whom, for what purpose—there’s no way to govern AI usage effectively. Observability across the runtime layer is foundational to both security and trust.

Taken together, these capabilities form a new kind of security layer—purpose-built for runtime, and essential for any enterprise scaling GenAI to do so with confidence.

From Insight to Action

Runtime security is no longer a nice-to-have, it’s a strategic imperative. For security leaders in any organization, the question is no longer if runtime should be secured. It’s how soon.

About the Author

Related Blog Posts

The hidden cost of unmanaged AI infrastructure

AI platforms don’t lose value because of models. They lose value because of instability. See how intelligent traffic management improves token throughput while protecting expensive GPU infrastructure.

AI security through the analyst lens: insights from Gartner®, Forrester, and KuppingerCole

Enterprises are discovering that securing AI requires purpose-built solutions.

F5 secures today’s modern and AI applications

The F5 Application Delivery and Security Platform (ADSP) combines security with flexibility to deliver and protect any app and API and now any AI model or agent anywhere. F5 ADSP provides robust WAAP protection to defend against application-level threats, while F5 AI Guardrails secures AI interactions by enforcing controls against model and agent specific risks.

Govern your AI present and anticipate your AI future

Learn from our field CISO, Chuck Herrin, how to prepare for the new challenge of securing AI models and agents.

New 7.0 release of F5 Distributed Cloud Services accelerates F5 ADSP adoption

Our recent 7.0 release is both a major step and strategic milestone in our journey to deliver the connectivity, security, and observability fabric that our customers need.

F5 provides enhanced protections against React vulnerabilities

Developers and organizations using React in their applications should immediately evaluate their systems as exploitation of this vulnerability could lead to compromise of affected systems.